Step-by-Step Guide to Create AI Art: From Concept to Masterpiece

Creating AI art begins with a clear vision or concept you'd like to visualize. Start by brainstorming ideas and refining your prompt with descriptive language: details like mood, lighting, artistic style, and composition can significantly influence the output. Tools such as Midjourney and DreamStudio by Stability AI allow users to generate high-quality images from text descriptions, making it easier than ever to turn abstract thoughts into visual form.

Once you've crafted your prompt, experiment with different settings and parameters to see how variations affect the result. Many platforms offer controls for aspect ratio, stylization strength, and model versions, giving you creative flexibility. Don’t be afraid to iterate—AI art often improves through multiple rounds of refinement, where you adjust keywords or add artistic references like “in the style of Van Gogh” or “cyberpunk cityscape at night.”
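As a rough illustration of how an aspect-ratio setting translates into pixel dimensions, the sketch below picks a width and height for a given ratio under a fixed pixel budget. The helper name `dimensions_for_ratio`, the default budget of 1024 x 1024 pixels, and the rounding to multiples of 64 are assumptions made for this example; each platform defines its own supported sizes, so treat its documentation as the authority.

```python
import math

def dimensions_for_ratio(ratio_w, ratio_h, pixel_budget=1024 * 1024, multiple=64):
    """Return (width, height) close to ratio_w:ratio_h within a pixel budget.

    Hypothetical helper: the budget and the rounding multiple are
    illustrative defaults, not values taken from any specific platform.
    """
    # Ideal (unrounded) size so that width * height equals the budget.
    ideal_height = math.sqrt(pixel_budget * ratio_h / ratio_w)
    ideal_width = ideal_height * ratio_w / ratio_h
    # Round down to the nearest multiple so the budget is never exceeded.
    width = max(multiple, int(ideal_width // multiple) * multiple)
    height = max(multiple, int(ideal_height // multiple) * multiple)
    return width, height

print(dimensions_for_ratio(1, 1))
print(dimensions_for_ratio(16, 9))
```

Rounding down keeps the result inside the budget at the cost of a slightly inexact ratio, which is usually an acceptable trade for a first draft.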

After generating a base image, consider using post-processing techniques in programs like Adobe Photoshop or the open-source alternative GIMP to enhance colors, fix inconsistencies, or blend multiple outputs. Some artists also combine AI-generated elements with traditional digital painting for a more personalized touch. This hybrid approach allows for greater control while still leveraging the speed and creativity of AI.

Finally, always review ethical considerations when creating and sharing AI art. Be mindful of copyright implications and the origins of training data. Platforms like DALL·E by OpenAI provide guidelines on acceptable use, and understanding these helps ensure your work respects both legal standards and artistic communities. With practice, AI becomes not just a tool, but a collaborative partner in your creative journey.

Step 1: Define Your Concept and Vision

Every compelling artwork, whether traditional or AI-generated, stems from a well-defined concept. Before diving into tools or techniques, take a moment to reflect on the core message or emotion you wish to express. Is your vision rooted in nostalgia, wonder, or perhaps dystopian realism? Clarifying these intentions early helps shape every subsequent decision, from subject matter to stylistic choices. Resources like Art Fund offer insights into how themes and emotions have driven artistic movements throughout history.

Once you’ve identified your central theme, begin outlining specific visual components. A futuristic cityscape might call for neon hues, sharp angles, and dramatic contrasts, while a mystical forest could thrive on soft lighting, lush greens, and ethereal glows. Consider creating a brief mood board or list that includes your desired color palette, lighting conditions (e.g., golden hour, cyberpunk night), and compositional structure—such as symmetry, rule of thirds, or dynamic diagonals. Websites like Colormind can assist in generating harmonious color schemes based on mood.

These foundational elements not only guide your creative process but also enhance the precision of your prompts when working with generative AI models. The more detailed and intentional your description, the closer the output will align with your original vision. Think of it as giving clear directions to a collaborator who’s eager to bring your imagination to life. For tips on crafting effective prompts, Prompt Engineering provides valuable frameworks used by digital artists worldwide.

Step 2: Craft a Detailed Text Prompt

Creating compelling AI-generated artwork starts with crafting a well-structured and descriptive text prompt. The specificity of your language directly influences the output, guiding the model to generate visuals that align closely with your intended vision. Instead of vague terms like "a nice landscape," opt for rich descriptions that include key elements such as subject, mood, lighting, and composition. For instance, describing "a tranquil mountain lake at sunrise, glassy water reflecting snow-capped peaks, golden light filtering through pine trees, in the style of Bob Ross" gives the AI clear artistic direction.

Equally important is defining the artistic style or genre you're aiming for. Whether you prefer photorealism, impressionism, or a cyberpunk aesthetic, naming the style helps anchor the visual outcome. Referencing known artists or studios—like mentioning "Makoto Shinkai’s weather effects" or "Moebius-inspired character design"—can further refine results by leveraging recognizable visual languages. These references act as shorthand for complex aesthetics, making it easier for the AI to interpret and apply them accurately.

Don't overlook environmental details such as time of day, weather conditions, or atmospheric effects. Elements like fog, rain, or neon-lit streets at night can dramatically alter the mood and depth of the generated image. Including spatial cues—such as "wide-angle view," "close-up portrait," or "aerial perspective"—also helps shape the composition. The more sensory and contextual information you provide, the richer and more intentional the artwork becomes.
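One way to keep these prompt components organized is to assemble them from named parts, as in the minimal sketch below. The function `build_prompt`, its field names, and the ordering of the parts are illustrative assumptions, not a format required by any AI art platform.

```python
def build_prompt(subject, style=None, lighting=None, mood=None,
                 environment=None, framing=None):
    """Join the non-empty prompt parts into one comma-separated string.

    Hypothetical helper: the field names and their order are an
    illustrative convention, not a format required by any AI art tool.
    """
    parts = [subject, environment, lighting, mood, framing]
    if style:
        parts.append(f"in the style of {style}")
    # Drop missing parts and strip stray whitespace.
    cleaned = [p.strip() for p in parts if p and p.strip()]
    return ", ".join(cleaned)

prompt = build_prompt(
    subject="a tranquil mountain lake at sunrise",
    environment="glassy water reflecting snow-capped peaks",
    lighting="golden light filtering through pine trees",
    framing="wide-angle view",
    style="Bob Ross",
)
print(prompt)
```

Keeping each element in its own field makes it easy to change one thing at a time, for example swapping only the lighting, and to see which change caused a difference in the output.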

Ultimately, prompt engineering is both an art and a science. Experimentation and iteration lead to better results over time, so keep refining your descriptions based on previous outputs. Resources like Prompt Engineering Guide offer valuable insights into best practices for crafting effective prompts across various AI art platforms. With practice, you'll learn how to balance detail and creativity to consistently produce stunning, on-target visuals.

Step 3: Choose the Right AI Tool

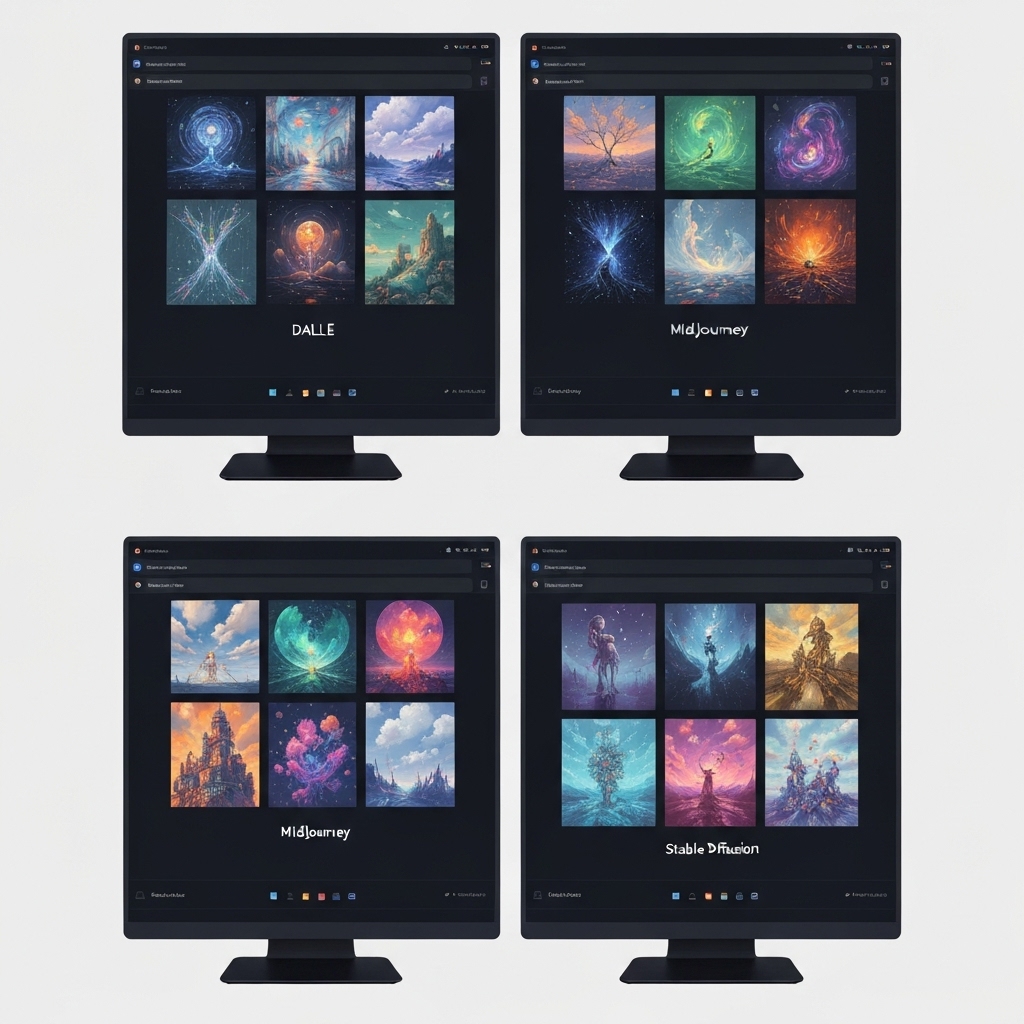

When exploring AI art generation, it's essential to understand the distinct capabilities of leading platforms. DALL·E, developed by OpenAI, stands out for its ability to produce imaginative and surreal images from complex textual prompts. Whether you're visualizing fantastical creatures or abstract concepts, DALL·E interprets prompts with remarkable creativity, making it ideal for conceptual design and storytelling.

For those drawn to visually rich and cinematic aesthetics, Midjourney offers a powerful solution. Widely used in artistic communities, it generates high-resolution images with a painterly or film-inspired quality. Its strength lies in producing cohesive, mood-driven visuals that resonate well in creative industries like entertainment, fashion, and digital art.

Meanwhile, Stable Diffusion, backed by Stability AI, provides extensive customization and flexibility. Because its model weights are openly available, it appeals to technically proficient users who want full control over their image generation pipeline. With options to run locally and integrate custom models, it supports advanced workflows in research, development, and personalized art creation.

Choosing the right platform depends on your artistic goals and technical expertise. Casual creators may favor DALL·E’s intuitive interface, while artists might lean toward Midjourney’s aesthetic finesse. Developers and power users often prefer Stable Diffusion for its adaptability. Evaluating these strengths helps ensure a better match between tool and vision.

Step 4: Generate and Refine Your Art

Creating compelling visual content often begins with a clear and detailed prompt, but even the most thoughtful input may not yield the perfect image on the first try. After generating your initial image, take a step back and assess it critically—does it align with your intended mood, composition, and subject matter? Pay attention to nuances like lighting, perspective, and proportions, as these elements greatly influence the final impact. If discrepancies arise, don’t hesitate to refine your prompt by specifying attributes such as artistic style, color palette, or environmental context to guide the AI more effectively.

Iteration is a powerful tool in digital art creation. Each version brings you closer to your envisioned outcome, allowing for incremental improvements. Many AI platforms support features like image-to-image generation, where you can upload an existing result and adjust the prompt strength to maintain certain elements while evolving others. This method offers greater control, especially when fine-tuning intricate details. For example, if a character’s expression seems off, slightly modifying the prompt with terms like “warm smile” or “intense gaze” can produce markedly different emotional tones.
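To iterate systematically rather than by trial and error, it can help to list every combination of prompt tweak and image-to-image strength before generating anything. The sketch below does this with hypothetical names: `prompt_variations`, the dictionary keys, and the example base prompt are all assumptions for illustration. In many image-to-image tools a lower strength keeps the result closer to the uploaded image, but the name and scale of this setting vary by platform.

```python
from itertools import product

def prompt_variations(base_prompt, expressions, strengths):
    """Yield one settings dict per (expression, strength) combination.

    Hypothetical helper: the dict keys are illustrative and do not match
    any particular platform's API.
    """
    for expression, strength in product(expressions, strengths):
        yield {
            "prompt": f"{base_prompt}, {expression}",
            "strength": strength,
        }

runs = list(prompt_variations(
    "portrait of an elderly sailor",
    expressions=["warm smile", "intense gaze"],
    strengths=[0.3, 0.6],
))
for run in runs:
    print(run)
```

Saving this list alongside the generated images gives you a record of which keywords and settings produced each result.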

Inpainting is another valuable technique that enables localized edits without altering the entire image. By masking specific regions, such as a cluttered background or poorly rendered hands, you can instruct the AI to regenerate just those areas with improved coherence. This precision saves time and preserves the aspects of the image that already work well. Several platforms, including tools from Stability AI, provide inpainting within their interfaces, making it easier to polish visuals efficiently.
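Conceptually, an inpainting mask is just a grid the same size as the image that marks which pixels to regenerate. The sketch below builds a rectangular mask in plain Python. The function `rectangle_mask` is hypothetical, and the convention that 255 means "regenerate" is an assumption; some tools invert it or use transparency instead.

```python
def rectangle_mask(width, height, box):
    """Return a height x width grid: 255 inside the box, 0 elsewhere.

    `box` is (left, top, right, bottom) with right and bottom exclusive.
    Assumption: 255 marks pixels to regenerate; some tools invert this
    or use transparency instead.
    """
    left, top, right, bottom = box
    return [
        [255 if left <= x < right and top <= y < bottom else 0
         for x in range(width)]
        for y in range(height)
    ]

mask = rectangle_mask(8, 6, box=(2, 1, 5, 4))
# Print a small text preview: '#' marks the region to regenerate.
for row in mask:
    print("".join("#" if value else "." for value in row))
```

In practice you would usually paint the mask in the tool's interface or an image editor; the grid view is only meant to show what the mask represents.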

To master AI-generated imagery, treat the process as a collaborative dialogue between your creativity and the model’s interpretation. Continuously test variations, document effective keywords, and leverage advanced features strategically. Reputable resources from organizations like OpenAI Research and tutorials on Hugging Face offer insights into prompt engineering and model capabilities, helping you refine your approach with each iteration.

Step 5: Post-Process and Finalize

After generating your base image using AI tools, the next step is refining it in digital editing software such as Adobe Photoshop or the free alternative GIMP. These programs allow for precise control over elements like contrast, color balance, and sharpness, helping to enhance the overall visual impact. Tweaking these settings can transform a flat or lifeless output into a vibrant and dynamic composition that better reflects your artistic vision.
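To make the idea of a contrast adjustment concrete, the sketch below applies one simple linear formula to a single RGB pixel: each channel is pushed away from, or pulled toward, a middle gray. The function `adjust_contrast` and the midpoint of 128 are assumptions for illustration; Photoshop and GIMP use their own, more sophisticated curves.

```python
def adjust_contrast(pixel, factor, midpoint=128):
    """Scale an RGB pixel's distance from a midpoint gray by `factor`.

    A factor above 1 increases contrast, below 1 reduces it. This is one
    simple linear formula; image editors may use different curves.
    """
    def clamp(value):
        # Keep each channel inside the valid 0..255 range.
        return max(0, min(255, round(value)))
    return tuple(clamp(midpoint + (channel - midpoint) * factor)
                 for channel in pixel)

print(adjust_contrast((100, 128, 200), 1.5))
```

Values that would fall outside 0 to 255 are clamped, which is why very high contrast settings lose detail in shadows and highlights.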

Beyond basic adjustments, this stage opens the door to adding hand-drawn details that infuse originality into the piece. Whether sketching subtle textures, custom lighting effects, or unique character features, these manual additions create a fusion of artificial intelligence and human creativity. Artists often use graphics tablets or styluses to maintain a natural drawing feel, ensuring the final artwork carries a distinct personal signature.

Another powerful technique involves blending multiple AI-generated images into a single composite. By layering different outputs—each with unique strengths—you can construct a more compelling scene than any individual generation might achieve. Tools like layer masks and blending modes in Photoshop or GIMP make it easy to seamlessly merge elements while preserving depth and coherence.
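The arithmetic behind layer masks and blending modes is simple enough to show on single pixels. The sketch below is a simplified illustration, not how any particular editor is implemented: `blend_with_mask` mixes two RGB pixels according to a mask value, and `multiply` shows one common blending mode. Both function names are hypothetical.

```python
def blend_with_mask(top, bottom, mask):
    """Blend two RGB pixels using a layer-mask value from 0 to 255.

    255 shows the top layer fully, 0 shows the bottom layer fully
    (a common layer-mask convention).
    """
    alpha = mask / 255
    return tuple(round(t * alpha + b * (1 - alpha))
                 for t, b in zip(top, bottom))

def multiply(top, bottom):
    """Multiply blend: darkens by multiplying channels scaled to 0..1."""
    return tuple(round(t * b / 255) for t, b in zip(top, bottom))

print(blend_with_mask((255, 0, 0), (0, 0, 255), 255))
print(blend_with_mask((200, 100, 0), (0, 100, 200), 51))
print(multiply((255, 128, 0), (128, 128, 128)))
```

A soft-edged mask is the same calculation with intermediate mask values along the border, which is what lets two generated images merge without a visible seam.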

This post-generation refinement process is where AI-assisted art truly becomes digital art. It’s not just about correcting imperfections but enhancing storytelling, mood, and technical quality. As many artists emphasize, the AI provides a foundation, but the final expression comes from the creator’s intent and craftsmanship—bridging algorithmic possibility with human imagination. For tips on digital compositing, resources like Digital Arts Online offer valuable tutorials and insights.

Conclusion: Unleash Your Creative Potential

Creating AI art is a dynamic process that blends human creativity with the computational power of machine learning. At its core, this collaboration begins with a clear vision—translating an abstract idea into a tangible concept that can be communicated to an AI model through text prompts. The quality of the output often hinges on how precisely the artist conveys elements like style, mood, composition, and subject matter. Platforms such as DALL·E and Stability AI's Stable Diffusion empower users to generate diverse visuals by interpreting these detailed instructions, turning words into compelling imagery.

Prompt engineering plays a crucial role in this creative workflow. A well-crafted prompt might include descriptors such as "a surreal landscape at sunset, painted in the style of Studio Ghibli, vibrant colors, soft lighting," guiding the AI toward a specific aesthetic. Iteration is key: artists often generate multiple variations, adjusting keywords or parameters to refine the results. Tools like Midjourney offer intuitive interfaces for exploring these iterations, enabling users to evolve their vision through successive generations.

Choosing the right platform depends on the desired outcome, technical comfort, and artistic goals. Some tools cater to photorealism, while others excel at fantastical or abstract styles. Many artists combine AI-generated outputs with traditional digital editing in software like Adobe Photoshop or open-source alternatives such as GIMP, blending automated creation with manual refinement. This hybrid approach expands creative possibilities, allowing for greater control over the final piece.

As AI art continues to evolve, the most successful creators are those who remain experimental and open-minded. Exploring new techniques, studying community examples on platforms like CivitAI, and staying informed about advancements in generative models can inspire fresh ideas. Ultimately, AI does not replace the artist—it amplifies their voice, offering a new medium through which imagination can take form in unprecedented ways.